Here’s the AI history that actually matters for your roadmap: what changed, why it stuck, and how to capitalize on it without chasing hype.

For a concise primer, see Tableau’s overview of AI’s evolution (A Brief History of AI). Below are the milestones with the most practical impact today.

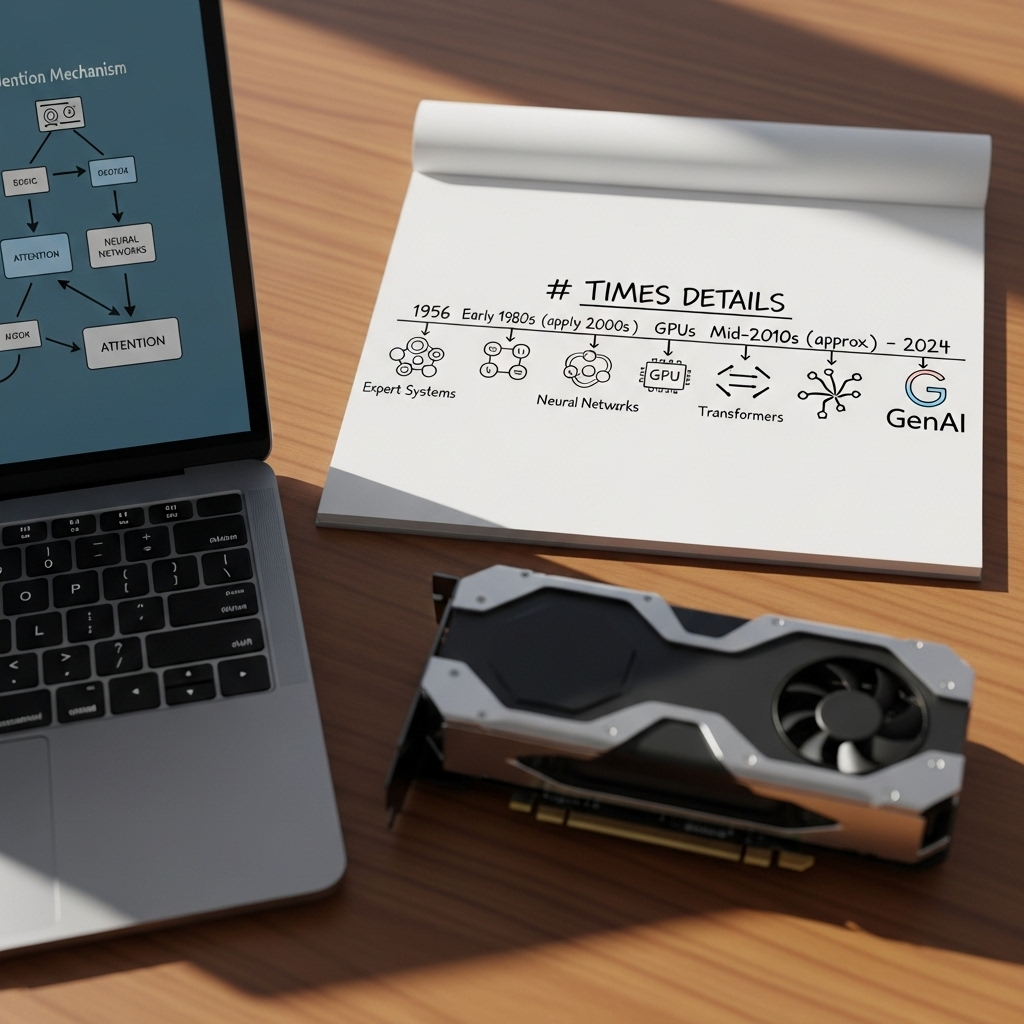

Why this timeline matters

Each phase of AI changed what’s feasible, where ROI comes from, and which skills you need. Knowing the shifts helps you pick the right bets now.

- Prioritize projects aligned with today’s strengths (foundation models, multimodality, RAG).

- Avoid past pitfalls (brittle rules, data debt, one-off PoCs).

- Hire and upskill for the era you’re in—not the one you remember.

8 milestones that explain today’s AI boom

- 1956 — Dartmouth Workshop: “Artificial Intelligence” is coined, setting the ambition for machines that reason, plan, and learn.

- 1970s–1980s — Expert Systems: Rules-based automation shines in narrow domains but proves brittle and costly to maintain. Lesson: hard-coded logic doesn’t scale.

- 1986 — Backpropagation popularized: Training multi-layer neural networks becomes practical, reviving learning systems beyond hand-written rules.

- 1997 — Deep Blue beats Kasparov: Specialized AI wins with compute, data, and search. Signals the power of focused, high-performance systems.

- 2012 — ImageNet deep learning breakthrough: GPUs + big data (AlexNet) trigger a step-change in accuracy, igniting the deep learning era.

- 2017 — Transformers arrive: “Attention Is All You Need” enables parallel training and long-range context, unlocking scale and versatility (paper).

- 2020–2022 — Foundation models and ChatGPT: Pretrained, general-purpose models deliver usable language capabilities for search, support, and coding at scale.

- 2023–2024 — Multimodal + RAG + agents: Models reason across text, image, and speech; retrieval grounds outputs in your data; tool use enables workflows. See broader trends in the Stanford AI Index.
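To make the 2017 transformer milestone more concrete, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation described in "Attention Is All You Need" (softmax(QKᵀ/√d)·V). It is illustrative only. Real models use learned projections for queries, keys, and values, multiple heads, and batched matrix libraries, and all of that is omitted here. The point it shows is that each query is scored against every key directly, with no step-by-step recurrence. That is what allows parallel training and direct long-range connections.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating to avoid overflow.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return one output vector per query: softmax(q . k / sqrt(d)) applied to the values."""
    d = len(keys[0])  # key/query dimension used for scaling
    outputs = []
    for q in queries:
        # Score this query against every key; no query depends on another
        # query's result, so all positions could be computed in parallel.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        dim = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs


# Self-attention over three toy 2-d token vectors (queries = keys = values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(tokens, tokens, tokens))
```

The loop over queries is written for readability. In practice the same computation is a single matrix multiply, which is why it maps so well onto GPUs.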

What to do next

- Inventory your “source of truth” data and enable retrieval (vector DB + RAG) for grounded answers and lower hallucination risk.

- Pilot one high-frequency, measurable use case (e.g., support deflection, sales email drafting, analyst summarization) before scaling.

- Adopt a model portfolio: pair a general-purpose LLM with a smaller task-specific model, and choose between them per use case by latency, cost, privacy, and quality.

- Upskill teams on prompt patterns, evaluation, and safety reviews; measure ROI with before/after baselines.
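The retrieval step from the first recommendation can be sketched in a few lines. This is a minimal sketch under loud assumptions. A toy bag-of-words `embed` function stands in for a real embedding model, and an in-memory list stands in for a vector DB. `embed`, `InMemoryVectorStore`, and `build_grounded_prompt` are hypothetical names, not any product's API, and the actual LLM call is left out.

```python
import math
import re
from collections import Counter


def embed(text):
    # Hypothetical stand-in for a real embedding model: a term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    # Counter returns 0 for missing terms, so only shared terms add to the dot product.
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Toy stand-in for a vector DB: stores (id, text, vector) rows, ranks by cosine similarity."""

    def __init__(self):
        self.rows = []

    def add(self, doc_id, text):
        # Embed once at ingest time.
        self.rows.append((doc_id, text, embed(text)))

    def search(self, query, k=2):
        q = embed(query)
        scored = [(cosine(q, vec), doc_id, text) for doc_id, text, vec in self.rows]
        scored.sort(key=lambda row: row[0], reverse=True)
        # Drop zero-similarity hits so unrelated documents never reach the prompt.
        return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_grounded_prompt(question, passages):
    # Grounding: the model is told to answer only from retrieved sources and cite them.
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the answer is not in the sources, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


store = InMemoryVectorStore()
store.add("refund-policy", "Refunds are issued within 14 days of purchase.")
store.add("shipping", "Standard shipping takes 3 to 5 business days.")
store.add("warranty", "Hardware carries a one year limited warranty.")
hits = store.search("How many days do refunds take?", k=1)
print(build_grounded_prompt("How many days do refunds take?", hits))
```

Swapping in a real embedding model and vector database should keep the same shape. You embed documents at ingest, embed the query, rank by similarity, and pass only the top passages to the LLM. Telling the model to say "I don't know" when the sources are silent is one common way to reduce hallucination risk.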

Key takeaway

Today’s edge comes from transformers, retrieval, and multimodality—not rules. Ground your GenAI in your data, pick use cases with measurable value, and iterate fast.

Get weekly, no-fluff AI playbooks in your inbox—subscribe to The AI Nuggets newsletter.