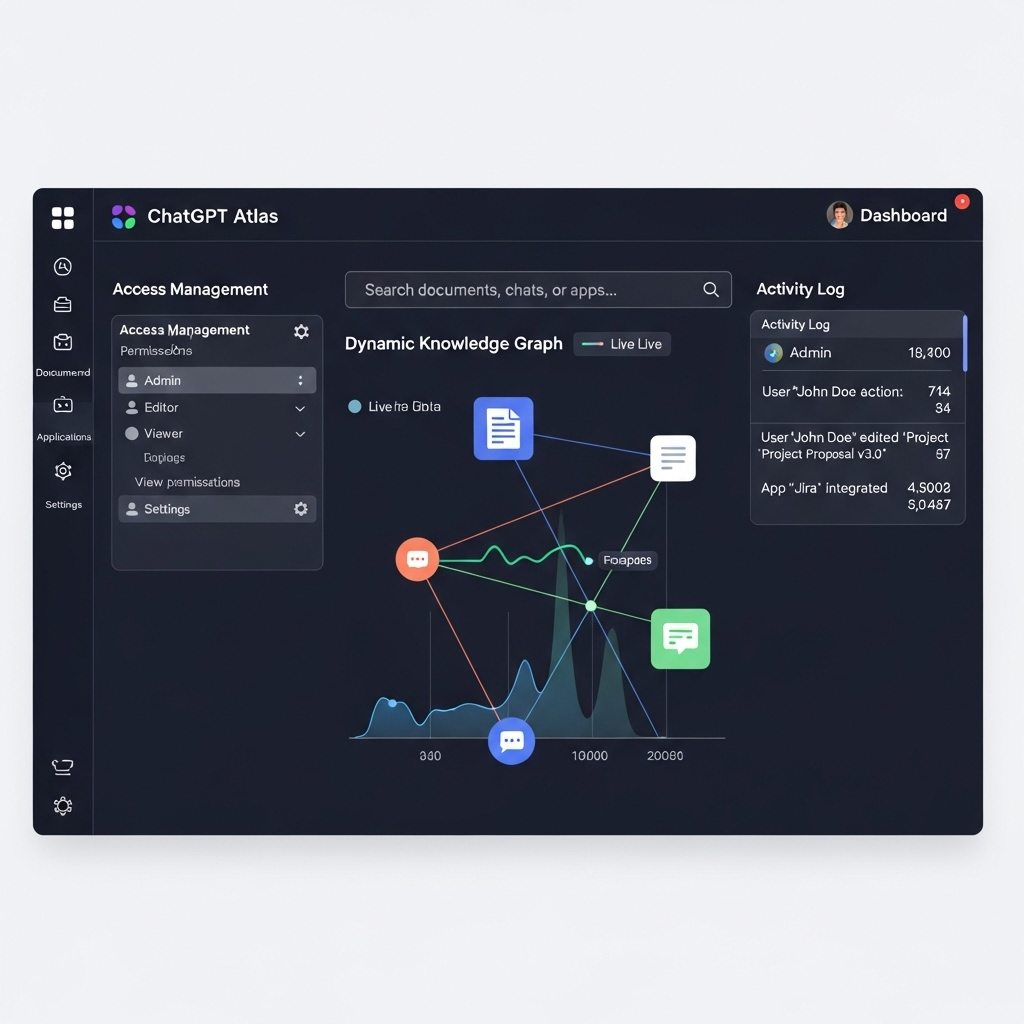

OpenAI introduced ChatGPT Atlas, a new way to organize and explore knowledge inside ChatGPT, aimed at faster answers, safer sharing, and better governance across teams.

See OpenAI’s announcement for details: Introducing ChatGPT Atlas.

What is ChatGPT Atlas?

Atlas is designed to map, search, and govern your organization’s knowledge inside ChatGPT and connected sources. Think of it as a structured knowledge layer that helps ChatGPT find the right information with traceability and permissions.

Why it matters

- Faster answers: reduce hunting through docs, chats, and wikis with a unified knowledge map.

- Safer sharing: apply org-wide permissions and auditability to AI-generated results.

- Higher quality: ground responses in authoritative sources with citations and context.

- Operational efficiency: turn knowledge into actions (summaries, briefs, drafts) with policy guardrails.

Quick setup checklist for admins

- Inventory sources: pick the 5–10 highest-value repositories (docs, wiki, tickets, CRM, code).

- Define access: mirror least-privilege roles and data sensitivity labels before connecting.

- Structure metadata: add owners, freshness dates, topics, and systems-of-record tags.

- Enable audit logs: turn on logging, versioning, and retention to trace answers back to sources.

- Test grounding: validate that answers cite approved sources; block untrusted content.

- Schedule red-teaming: run prompts that probe policy edges (PII, export controls, legal claims) and record which ones get through.
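
The metadata and grounding steps above can be prototyped before anything is connected. Here is a minimal sketch in plain Python, not an Atlas API: `SourceRecord`, `is_fresh`, `ungrounded_citations`, the sensitivity labels, and the 180-day freshness window are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List


@dataclass
class SourceRecord:
    """Hypothetical metadata kept for each approved source."""
    source_id: str
    owner: str
    last_reviewed: date
    topics: List[str] = field(default_factory=list)
    system_of_record: bool = False
    sensitivity: str = "internal"  # assumed labels: public, internal, restricted


def is_fresh(record: SourceRecord, today: date, max_age_days: int = 180) -> bool:
    """True if the source was reviewed within the assumed freshness window."""
    return (today - record.last_reviewed).days <= max_age_days


def ungrounded_citations(
    cited_ids: List[str], approved: Dict[str, SourceRecord], today: date
) -> List[str]:
    """Return cited IDs that are either not approved or past the freshness window."""
    bad = []
    for source_id in cited_ids:
        record = approved.get(source_id)
        if record is None or not is_fresh(record, today):
            bad.append(source_id)
    return bad
```

An empty list from `ungrounded_citations` means every citation points at an approved, recently reviewed source. Anything else is a candidate for blocking or manual review.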

Practical use cases

- Support: generate customer-ready replies grounded in product docs and past tickets.

- Sales: create account briefs and proposals using CRM notes and case studies.

- Ops: draft SOPs and checklists using policy manuals and change logs.

- Engineering: summarize RFCs, PRs, and incident reports with links and owners.

- Compliance: answer policy questions with citations to the latest approved documents.

Risks and controls

- Data leakage: restrict source scopes; mask or exclude sensitive fields (PII, secrets) by default.

- Hallucinations: require citations for high-stakes outputs; block answers without sources.

- Staleness: set freshness SLAs; auto-expire or flag outdated content in prompts.

- Access drift: sync permissions with identity provider; run quarterly access reviews.

- Over-reliance: keep a human-in-the-loop for legal, financial, or safety-critical decisions.
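
Two of these controls, masking sensitive fields and blocking uncited high-stakes answers, are easy to sketch as a post-processing gate. The example below is an illustration only: the regular expressions are deliberately simple, and `mask_sensitive` and `gate_answer` are hypothetical helpers, not part of any OpenAI product. A production setup would rely on a proper DLP or secret-scanning tool.

```python
import re
from typing import List, Optional

# Simplified patterns for illustration; real PII and secret detection needs more than this.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SECRET_RE = re.compile(r"\b(?:api[_-]?key|token|password)\s*[:=]\s*\S+", re.IGNORECASE)


def mask_sensitive(text: str) -> str:
    """Replace email addresses and key=value style secrets with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return SECRET_RE.sub("[SECRET]", text)


def gate_answer(answer: str, citations: List[str], high_stakes: bool) -> Optional[str]:
    """Return a masked answer, or None when a high-stakes answer has no citations."""
    if high_stakes and not citations:
        return None  # blocked: route to a human reviewer instead
    return mask_sensitive(answer)
```

Returning `None` rather than a weaker answer keeps the human-in-the-loop step explicit: the caller has to decide what happens to a blocked response.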

Metrics to watch

- Grounded answer rate: percent of responses with valid citations to approved sources.

- Time-to-answer: median time from question to usable answer, compared with a manual-search baseline.

- Deflection rate: tickets or requests resolved without human escalation.

- Policy violations: blocked prompts/outputs per 1,000 requests.

- User trust: thumbs-up rate and comment feedback on accuracy.
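
To make these definitions concrete, here is a minimal sketch that computes them from an interaction log. The `Interaction` fields and the `summarize` function are assumptions made for this example. Your logging schema will differ, and the manual-search baseline for time-to-answer has to be measured separately.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Interaction:
    """One logged request; field names are hypothetical."""
    cited_approved_source: bool
    seconds_to_answer: float
    escalated: bool
    blocked_by_policy: bool
    thumbs_up: Optional[bool] = None  # None means the user left no rating


def summarize(log: List[Interaction]) -> Dict[str, float]:
    """Compute the five metrics above over a non-empty log."""
    n = len(log)
    if n == 0:
        raise ValueError("log must contain at least one interaction")
    rated = [i for i in log if i.thumbs_up is not None]
    return {
        "grounded_answer_rate": sum(i.cited_approved_source for i in log) / n,
        "median_seconds_to_answer": median(i.seconds_to_answer for i in log),
        "deflection_rate": sum(not i.escalated for i in log) / n,
        "policy_violations_per_1000": 1000 * sum(i.blocked_by_policy for i in log) / n,
        # Only rated interactions count toward the thumbs-up rate.
        "thumbs_up_rate": sum(bool(i.thumbs_up) for i in rated) / len(rated) if rated else float("nan"),
    }
```

Tracking the thumbs-up rate over rated interactions only avoids treating silence as a negative signal. Whether that is the right choice depends on how often users rate at all.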

Takeaway

Treat Atlas as your AI-ready knowledge layer. Start small with high-value sources, enforce least-privilege access, require citations, and measure outcomes before scaling.
